Following last month’s AI webinar on the Rise of AI, October saw the second instalment of our AI webinar series: Unpacking the AI Suitcase. Our expert speakers unpacked Artificial Intelligence as a term to get to the bottom of what it really means.

Joining CEO.digital’s Craig McCartney were:

- David Skerrett, Digital Transformation Leader & All Things AI Webinar Chair

- Mark Diderich Brill, Professor & Business Expert

AI is a complex term, covering a lot of bases like Machine Learning and Deep Learning that could confuse the average user. David skilfully dissected the term and what it means today. Then, Mark took over the discussion to address the ethics of AI and what its introduction means for society.

Finally, our audience had the chance to voice their views on AI in our Good, Bad or Ugly? segment, where the unending debate over whether pineapple on pizza is acceptable also reared its ugly(?) head… The audience cast its vote and, we’re sorry to say, it isn’t!

Here’s a taster of what we got up to during the webinar.

Unbundling Artificial Intelligence

Back in September, we looked at the Rise of AI and series chair David Skerrett introduced viewers to the history of AI and the way it has evolved since it first came to life in the 1950s. But in this webinar, the focus shifted to what Artificial Intelligence actually means.

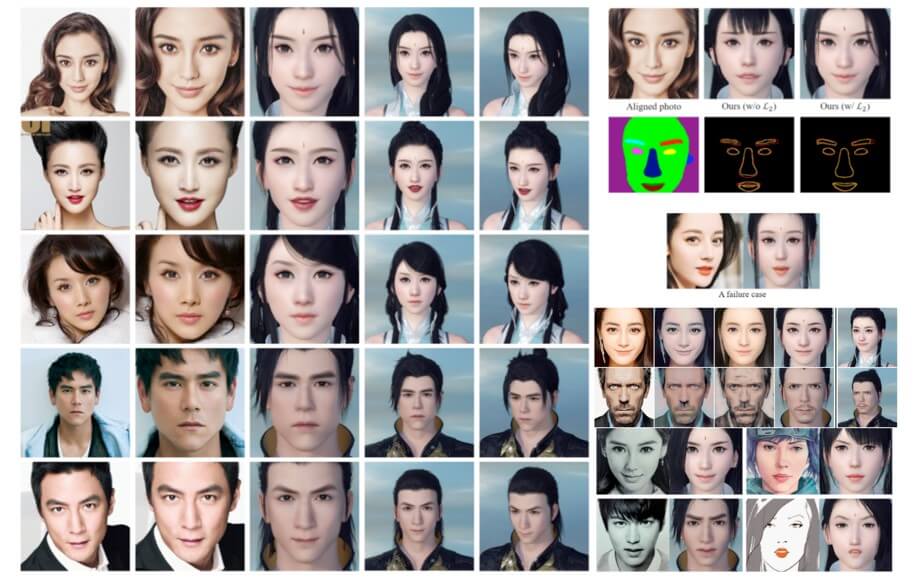

For David, AI is a constellation of technologies – hardware and software that includes Machine Learning and Natural Language Processing – that allows machines to sense, comprehend and learn. In the previous webinar, David described AI as a kind of scotch egg, with AI as the enclosing term and Machine Learning and Deep Learning the two central ingredients. But in this session, David expanded on his initial idea to show that AI is like a family tree of technologies.

There are two core branches of AI: Narrow/Weak AI and General/Strong AI. Narrow AI is a non-biological intelligence that is able to complete a single task, and do so well. For the most part, that’s where AI is today.

The other type of AI is General AI. These stronger AIs will be able to achieve any human task. Steve Wozniak, Apple co-founder, proposed a deceptively simple test to see if an AI was truly intelligent. If it could walk into any house and make a good cup of coffee, that AI would be a General (or Strong) AI, able to complete average human tasks.

While that may sound an easy task for a human to achieve, for an AI it is notoriously difficult. To make a good cup of coffee, you draw on a wealth of knowledge that you take for granted, such as:

- Knowing where to find a kitchen and what a kitchen looks like

- What coffee is and what it looks like

- How to work a kettle… and what a kettle is

- How much coffee to put in the cup to make sure it tastes nice – not too strong, not too weak

And so on. When you break the coffee-making process down, you realise that there are a lot of different elements at play. But while you could program a machine to make a cup of coffee in one house, you would need to program it completely differently to make a coffee in another house. A Strong AI wouldn’t need this additional input. It would be able to work out everything it needed to make a good cup of coffee regardless of environment.

So while Strong AI is right around the corner, there is another type of AI that will come in future decades: Super AI. This final type of AI will have an intelligence that vastly exceeds our own, but David argues that this won’t come for some time.

However, right now the IQ of AI has a long way to go. David was keen to point out that in 2014 the average human’s IQ at 18 years old was 97, while the 2016 IQ of the world’s biggest AI systems was 47.28 at its highest. For perspective, a 6-year-old human being has an average IQ of 55.5.

We haven’t incorporated human-like intelligence into machines yet, but we’re on our way – and businesses know it. David highlighted that companies that fully incorporate AI tools across their organisation could increase their economic value by 120% by 2030 (McKinsey). He also pointed out PwC’s claim that AI will contribute $15.7 trillion to the global economy by 2030.

David had plenty more statistics to share with our audience, but to find out more and how to best position your company to adopt AI, download the full webinar free from our website.

AI Ethics with Mark Brill

David ended his section by talking about the ethics behind current company use of AI. Too many companies are claiming to use AI when in reality they are playing a kind of ‘Wizard of Oz’ with technology. Chatbots are a particular problem. Users think they are talking to a robot, but all too often they are talking to a real person pretending to be a bot.

That’s a lack of transparency that is toxifying the debate around AI. But there are even more issues to contend with.

Mark started off his part of the webinar talking about what role AI has to play in the human endeavour, from creativity to journalism, facial recognition to morality.

His first subject was creativity. In 2018, a painting called the Portrait of Edmond Belamy sold at Christie’s for $432,500. The catch? It was created using a neural network by the French art collective Obvious. But while Obvious claimed that the painting was theirs, the fact it was made by an AI raises questions about who is really responsible. Is it the art collective who told the AI to paint, or the creator of the neural network itself? Also, can we consider this art if no human emotion played a role in its creation?

In journalism, we find another ethical dilemma. Satoshi is an AI trained by journalists to write articles on bitcoin for The Next Web. Using a selection of stock phrases about bitcoin and cryptocurrencies, Satoshi trawls the internet looking for newsworthy content. It then writes and posts articles about bitcoin. Usually, Satoshi’s articles are shorter, freeing journalists to write longer analytical content.

The trouble is that the bot, on occasion, can outperform human writers in terms of traffic and hits. What impact will this have on employees in the long run? And what is the measure of performance? If it is simple hits and traffic, is that as valuable as in-depth analytical content?

Later, Mark went on to discuss the introduction of self-driving cars. At the moment, over a million people a year are killed by cars, but we still drive. Self-driving cars might reduce the number of deaths per year, but there is a trust deficit in AI. We feel safer knowing that a human being is driving, even though statistically speaking they are more dangerous.

Now, what happens if your self-driving car is about to hit a group of five nuns? Does the AI carry on and hit the nuns, or does it swerve and drive you into a wall, killing you but saving the five nuns? Who does the AI have most responsibility to save?

Our audience voted on this very question and we were split 50/50. What do you think?

Regardless of your view, Mark was keen to point out that once you start to think about AI ethically, you can start to rethink how we live. For example, if you have self-driving cars, do you need car ownership? Wouldn’t it make more sense to share cars that can go away and drive themselves, pick up users and drop them off autonomously, even refuel themselves?

The possibilities and opportunities that AI will unlock are endless. But all the while, we will need to think ethically about the impact AI will have on society.

The Good, the Bad and the Ugly

As in the previous webinar, we concluded the session by opening the floor to our audience. We asked them to judge a selection of AI use cases and class them as good, bad or ugly. The examples and results are below!

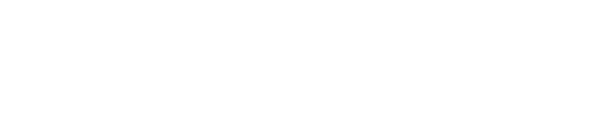

ImageNet Roulette

ImageNet Roulette is an art project initiated by the artist Trevor Paglen and an AI researcher, Kate Crawford. Its aim is to expose how systemic biases have been passed on to machines through the humans who trained their algorithms. The images are instantly deleted once the analysis is given to the users and no data is collected. It’s a tool that shows the flaws in the most widely used image recognition database.

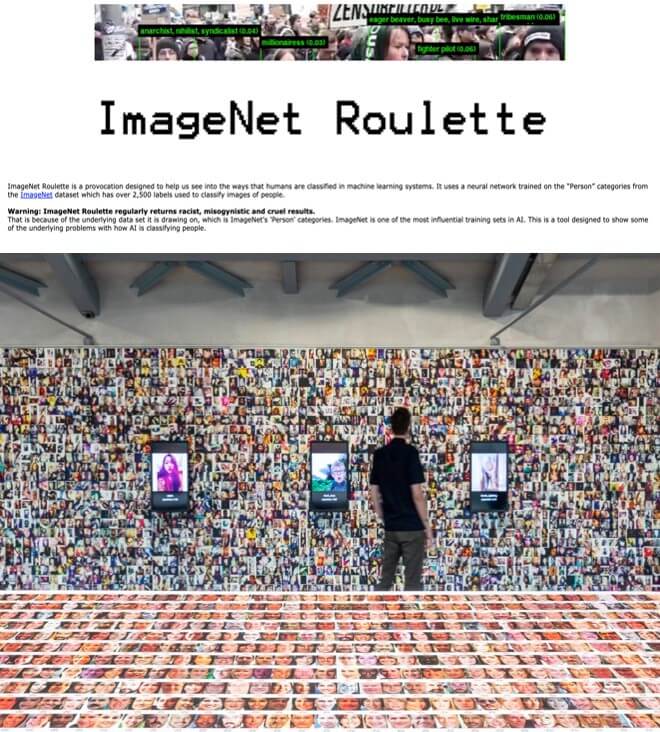

AI Turns Selfies into Video Game Characters

This AI uses facial capture technology to generate an avatar for gamers based on their own facial features. The team behind this AI focused on bone structure, unlike other 3-D modelling tools. The result is a more realistic representation of the user in-game. It has the potential to speed up player onboarding by matching how players typically design their characters.

Pizza-Making AI

The sharp minds at MIT aren’t only solving self-driving car problems… They’re also focusing on pizza! After training a neural network called PizzaGAN on thousands of pizza pictures, this AI knows not only how to identify individual toppings but how to distinguish their layers and the order in which they need to appear. From there, the system can create step-by-step guides for making pizza using only one example photo as a starting point. There is great potential here for visual problem solving, but as of right now the AI is only accurate 88% of the time with two toppings – the rating drops the more toppings there are. The AI also can’t cook pizza on its own…

Facial Recognition for Animals

Facial recognition has been getting a bad rep for the ways it could be used to infringe civil liberties. But outside the human realm, researchers at Oxford have discovered a new, rather fitting purpose for the technology: to aid them in monitoring the behaviours and interactions of chimpanzees. Using roughly 50 hours of footage taken over 14 years, the scientists extracted 10 million face images of 23 chimps and fed them into a deep neural network. The model was able to identify individuals with 93% accuracy and was four times better than novices.

AI-Powered Interviewing & Recruiting

Corporations are starting to use AI to screen candidates. It works by analysing the language, tone and facial expressions of candidates as they answer a set of identical interview questions, filming their responses on their mobile phone or laptop. The algorithms select the best applicants by assessing their performance against around 25,000 pieces of facial and linguistic data from previous interviews of those who have gone on to excel at their job. Unilever are using the technology currently, but Amazon recently abandoned its use of AI recruitment tools because they were found to be biased against female candidates.

Self-Driving Suitcases

Self-driving technology usually gets pigeon-holed with cars, but the Ovis Suitcase is bringing self-driving to your luggage. The suitcase can follow its owner using computer vision technology that lets it avoid obstacles and follow obediently. It demonstrates how AI, low-cost sensors and powerful small computing can drive forwards the next generation of autonomous ‘vehicles’. A group of Chinese students, meanwhile, have developed a self-driving bicycle…